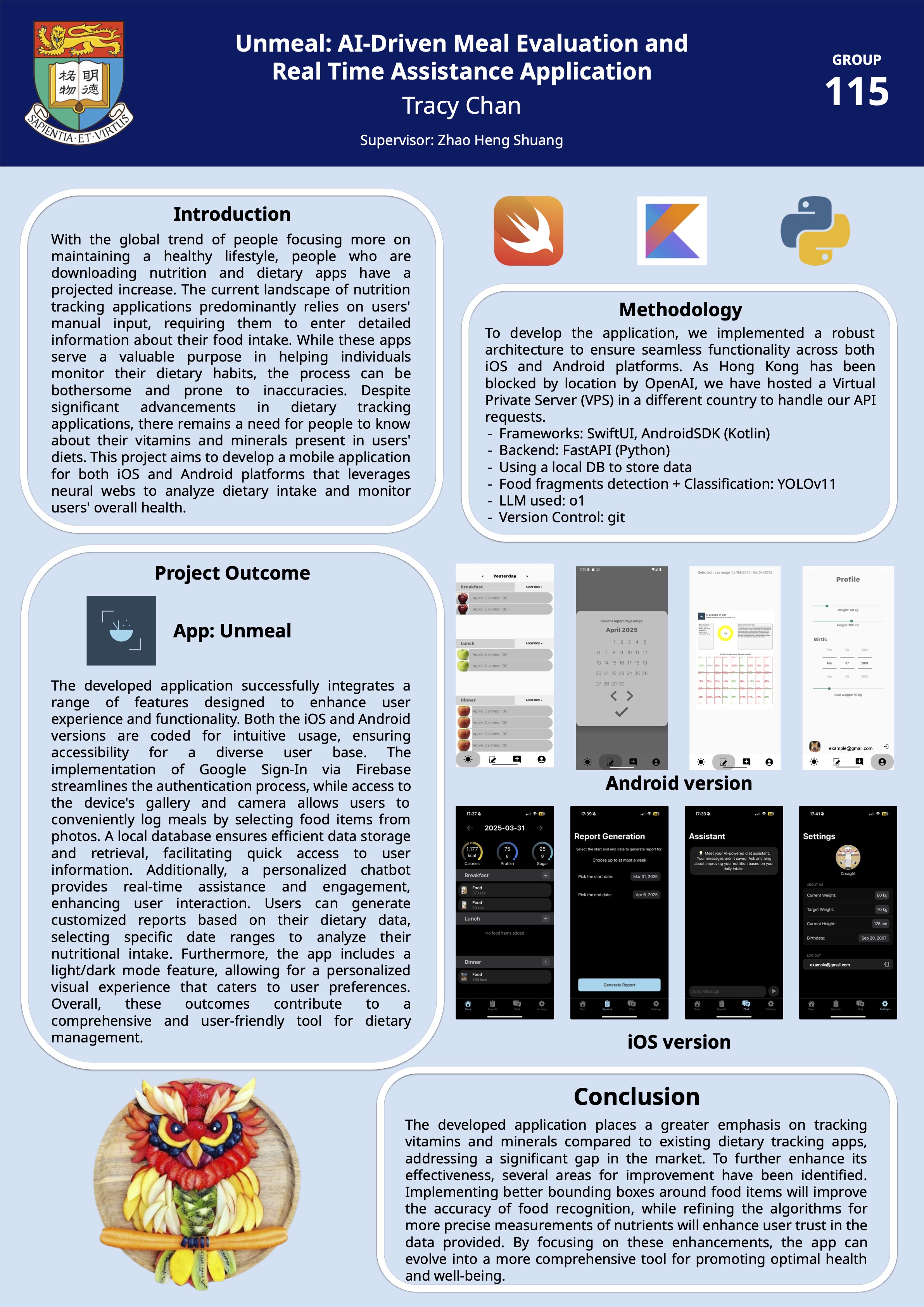

Micronutrient deficiencies are a pervasive yet often unrecognized global health issue, affecting an estimated one-third of the world’s population without obvious clinical symptoms. Conventional dietary assessment methods, such as manual food logging or sensor‑dependent imaging, either impose a substantial user burden or require specialized hardware, limiting widespread adoption. This report describes the design, implementation, and evaluation of a mobile platform that integrates lightweight computer vision preprocessing with large language model services to deliver real‑time, personalized nutrient feedback from standard smartphone images. We employ YOLOv11 for preliminary food‑group classification to minimize token usage in GPT‑4o API calls. The system comprises native iOS (Swift) and Android (Kotlin) frontends, a Python‑based, Docker‑containerized backend, local persistence via SwiftData and Room, and Firebase Authentication for secure user management. User interfaces, including diary, report, and conversational chatbot pages, provide intuitive workflows for capturing meal data, visualizing intake against individualized targets, and receiving tailored dietary insights. Preliminary system deployment demonstrates the feasibility of a cost‑effective, hardware‑agnostic approach, although the current classification model’s limited taxonomy constrains the range of foods it can recognize. Future enhancements will target comprehensive food recognition, depth‑based portion estimation, and integration with external health data sources.
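
As a rough illustration of how preliminary classification can shorten the text sent to a language model, the following minimal sketch condenses detector output into a short prompt. It is not the report's implementation: the `Detection` shape, the function names, the 0.5 confidence threshold, and the prompt wording are all assumptions made for this example, and no detector or API call is included.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detector output (hypothetical shape): a food-group label and a confidence."""
    label: str
    confidence: float


def summarize_food_groups(detections, min_confidence=0.5):
    """Drop weak detections, keep the best confidence per label,
    and return labels sorted by descending confidence (ties broken by name)."""
    best = {}
    for det in detections:
        if det.confidence >= min_confidence:
            best[det.label] = max(det.confidence, best.get(det.label, 0.0))
    return sorted(best, key=lambda label: (-best[label], label))


def build_prompt(detections, min_confidence=0.5):
    """Build a compact text prompt from the detected food groups.
    The wording is illustrative only (assumption)."""
    groups = summarize_food_groups(detections, min_confidence)
    if not groups:
        return ("No food groups were detected with sufficient confidence. "
                "Describe the meal and estimate its micronutrient content.")
    return ("Detected food groups: " + ", ".join(groups) + ". "
            "Estimate the micronutrient content of this meal.")


if __name__ == "__main__":
    sample = [
        Detection("vegetables", 0.9),
        Detection("grains", 0.7),
        Detection("vegetables", 0.6),  # duplicate label, lower confidence
        Detection("dairy", 0.3),       # below threshold, dropped
    ]
    print(build_prompt(sample))
```

Deduplicating labels and discarding low-confidence detections keeps the prompt to a single short sentence regardless of how many bounding boxes the detector returns, which is one plausible way a pre-classification step could reduce token usage.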