Language-model-based recommender algorithm

Combining the power of language models with collaborative filtering data

Introduction

Background

•Cold-start challenge in recommender systems (new users/items with no interaction history).

•Potential of Language Models (LMs): Generate semantic representations from textual metadata.

•Goal: Hybrid LM + Collaborative Filtering (CF) for zero-shot recommendations.

Objective

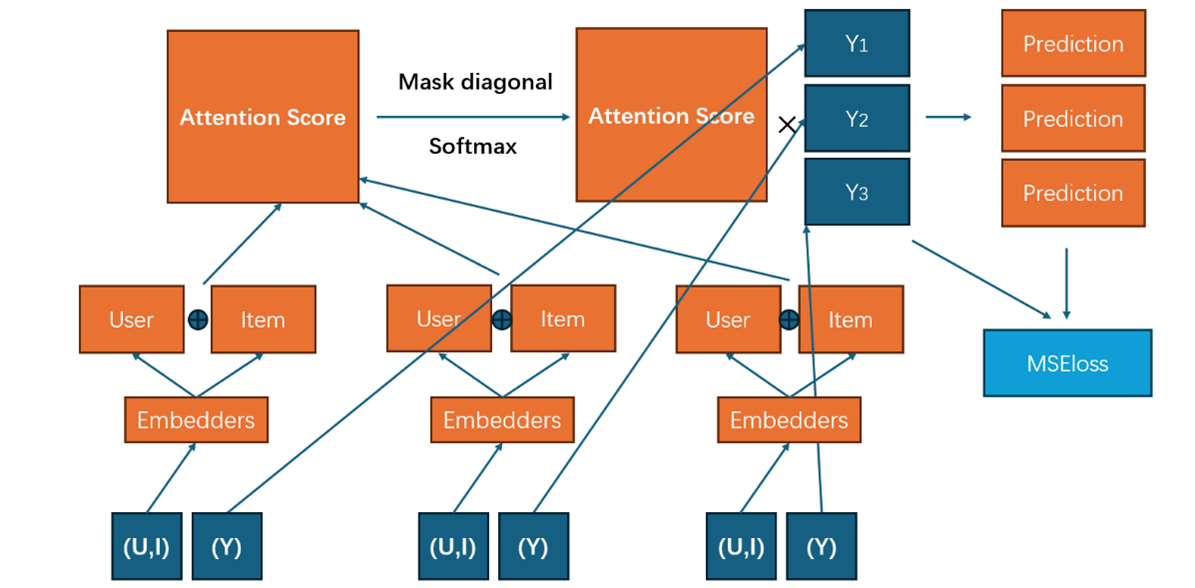

•Design high-performance CF architecture.

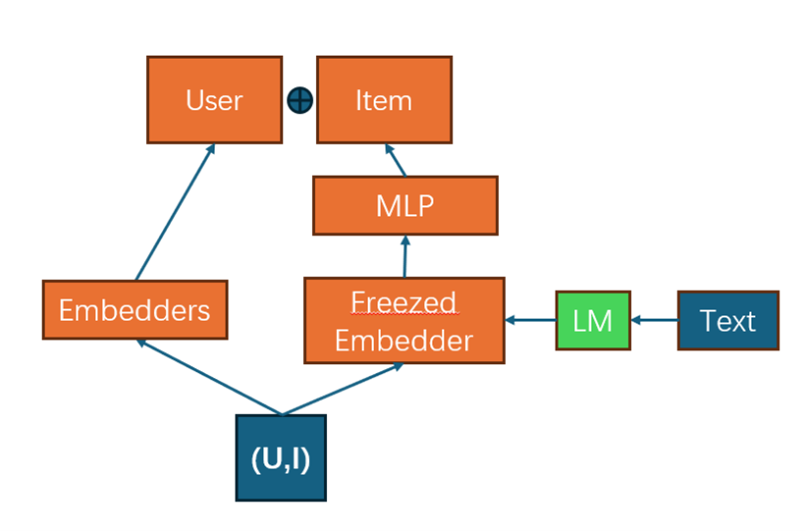

•Develop LM-CF integration framework.

•Optimize LM fine-tuning strategies.

Contribution

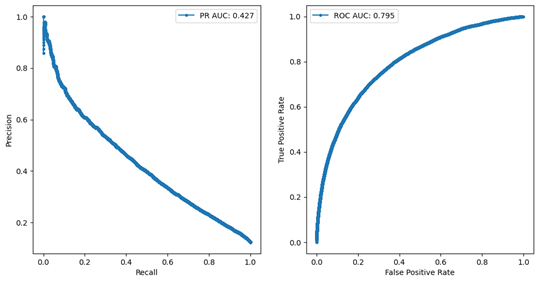

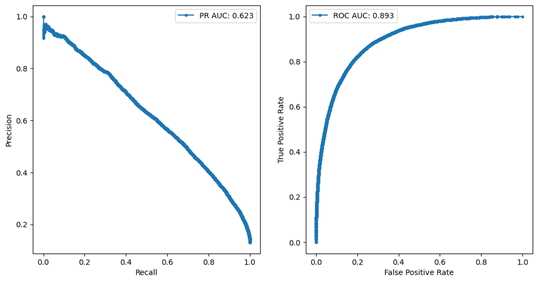

•Self-attention CF model (32% ROC-AUC and 87% PR-AUC improvement over a cosine-similarity model).

•Empirical validation of LM embeddings for cold-start scenarios.

•Decoupled fine-tuning framework for reduced computational costs.

Methodology


Self-Attention Model

Integrating with the language model

- In this project's dataset, only items have text descriptions

- Replace item embedder with LM embeddings

- User embedder remains unchanged
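The swap described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: `toy_text_encoder` is a hypothetical stand-in for a real LM encoder, `UserEmbedder` is a hypothetical learned lookup table, and the dot-product `score` is a placeholder for the self-attention model, whose internals are not given here.

```python
import math
import random
import zlib

DIM = 8  # embedding size, chosen arbitrarily for the sketch


def toy_text_encoder(text, dim=DIM):
    """Hypothetical stand-in for an LM encoder: hashed bag of tokens, L2-normalised."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 is deterministic across runs, unlike Python's built-in hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class UserEmbedder:
    """Hypothetical learned lookup table keyed by user id (unchanged from plain CF)."""

    def __init__(self, user_ids, dim=DIM, seed=0):
        rng = random.Random(seed)
        self.table = {u: [rng.gauss(0.0, 0.1) for _ in range(dim)] for u in user_ids}

    def __call__(self, user_id):
        return self.table[user_id]


def score(user_vec, item_vec):
    """Placeholder scorer: sigmoid of a dot product (the source uses self-attention)."""
    logit = sum(u * i for u, i in zip(user_vec, item_vec))
    return 1.0 / (1.0 + math.exp(-logit))


users = UserEmbedder(["u1", "u2"])

# A new item has no interaction history, but its description still yields a vector.
item_vec = toy_text_encoder("wireless noise cancelling headphones")
p = score(users("u1"), item_vec)
```

Because the item vector is derived from text rather than looked up by item id, an item that never appeared in training can still be scored, which is the property the cold-start setting relies on.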

Evaluation

Metrics

•ROC-AUC (Receiver Operating Characteristic AUC):

Measures classification discriminative power across all thresholds.

Baseline = 0.5 (random prediction performance).

•PR-AUC (Precision-Recall AUC):

Focuses on precision-recall tradeoffs for positive class detection.

Baseline = 0.12 (the positive-class rate, i.e. the dataset's 12% interaction density).
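The two baselines can be checked numerically. The sketch below uses synthetic labels with a 12% positive rate and purely random scores (both are assumptions for illustration, not the project's data), and computes ROC-AUC as a pairwise ranking probability and PR-AUC as average precision, one common estimator of the area under the precision-recall curve.

```python
import random


def roc_auc(labels, scores):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of precision values taken at the rank of each positive example."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits


rng = random.Random(0)
n = 2000
labels = [1 if rng.random() < 0.12 else 0 for _ in range(n)]  # synthetic, ~12% positives
random_scores = [rng.random() for _ in range(n)]              # an uninformative model

auc = roc_auc(labels, random_scores)
ap = average_precision(labels, random_scores)
prevalence = sum(labels) / n

print(f"ROC-AUC={auc:.3f}  PR-AUC={ap:.3f}  positive rate={prevalence:.3f}")
```

With uninformative scores, ROC-AUC should land near 0.5 and PR-AUC near the positive rate, which is why an improvement in PR-AUC is best read relative to 0.12 rather than 0.5.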

Performance of Self-Attention Model for known items

Performance of LM-integrated model for new items